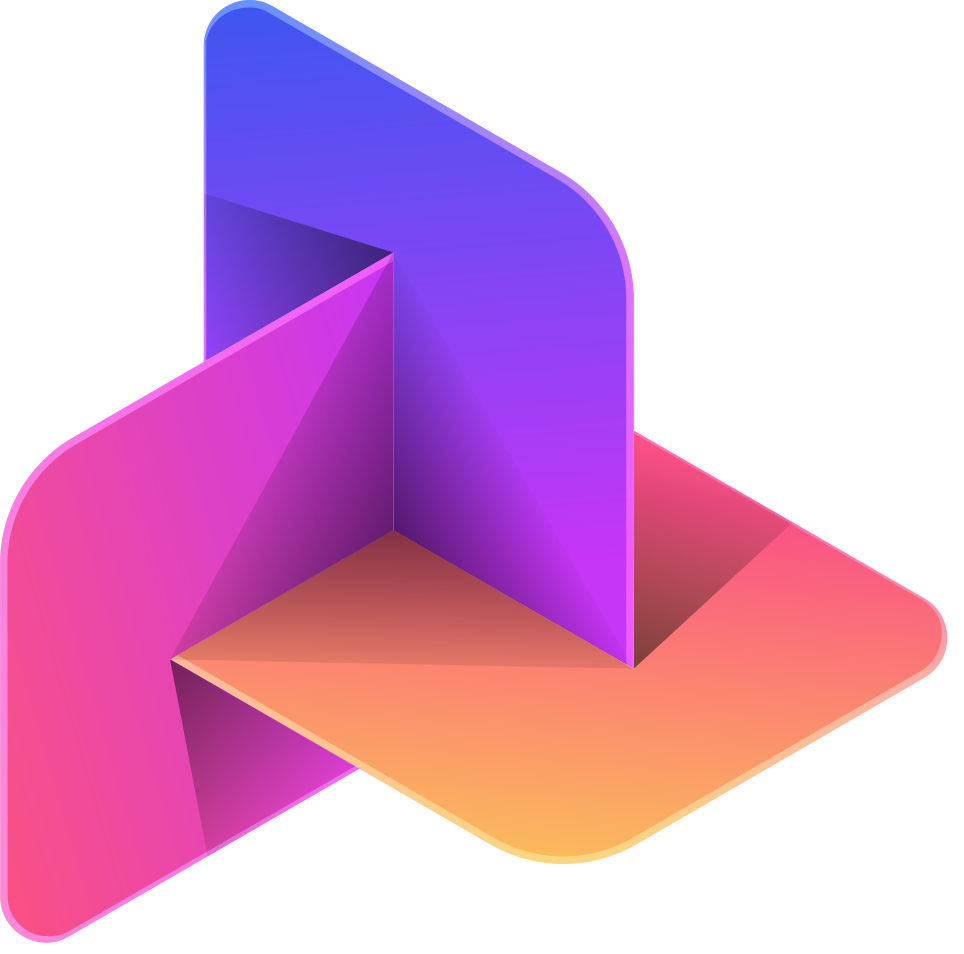

Seed Problems (EntropyMath Standard v1)

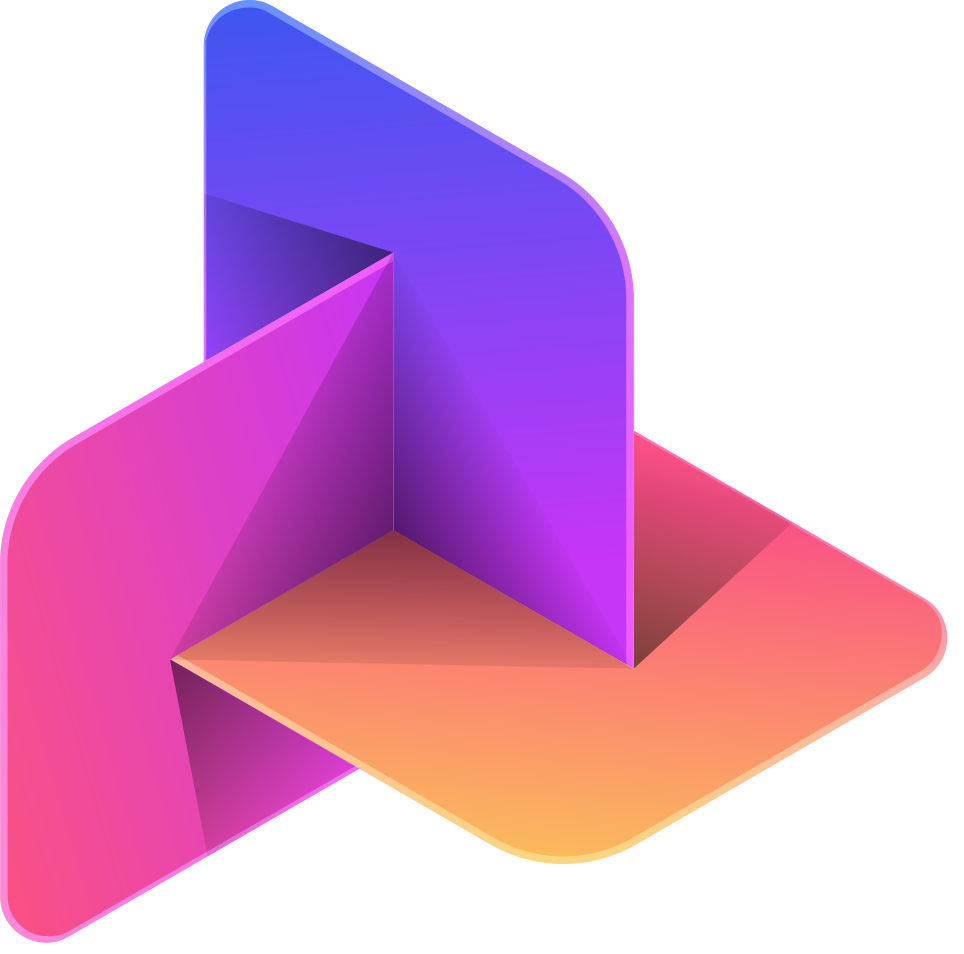

Figure: Model Accuracy vs Pass@3 (per-model bars, y-axis 0 to 100%; series: Accuracy, Pass@3)

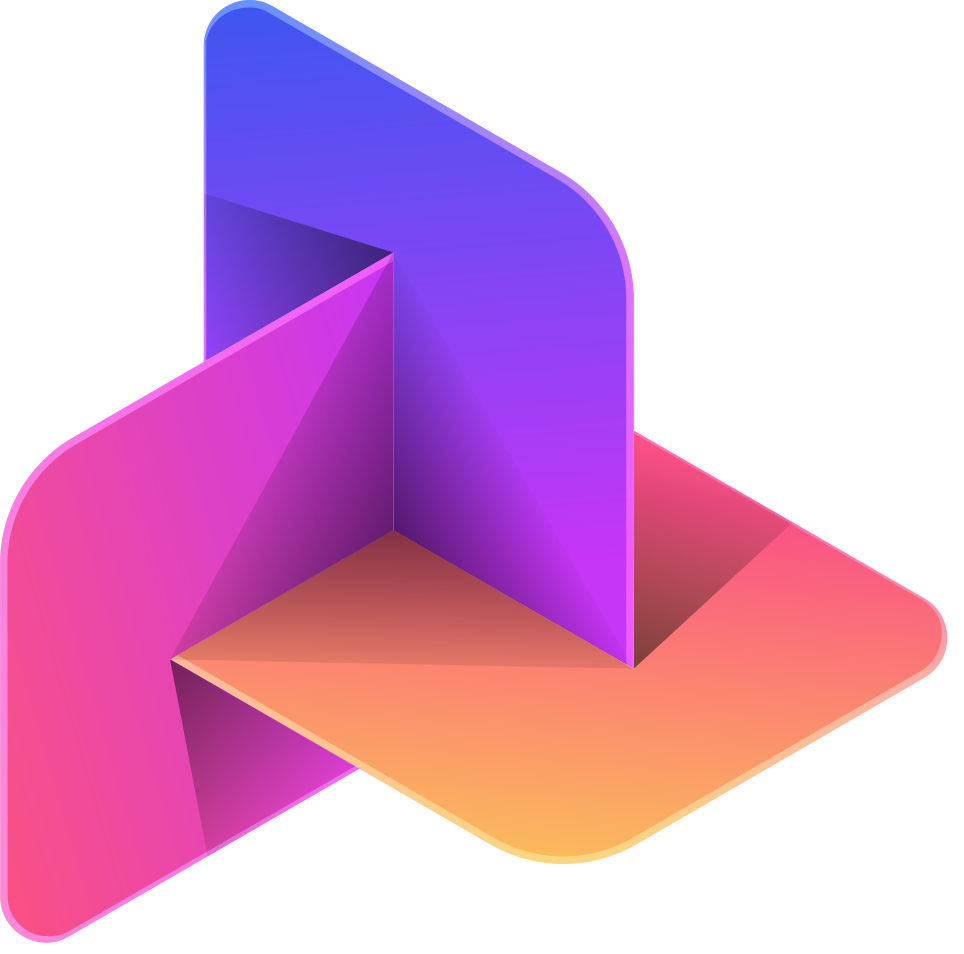

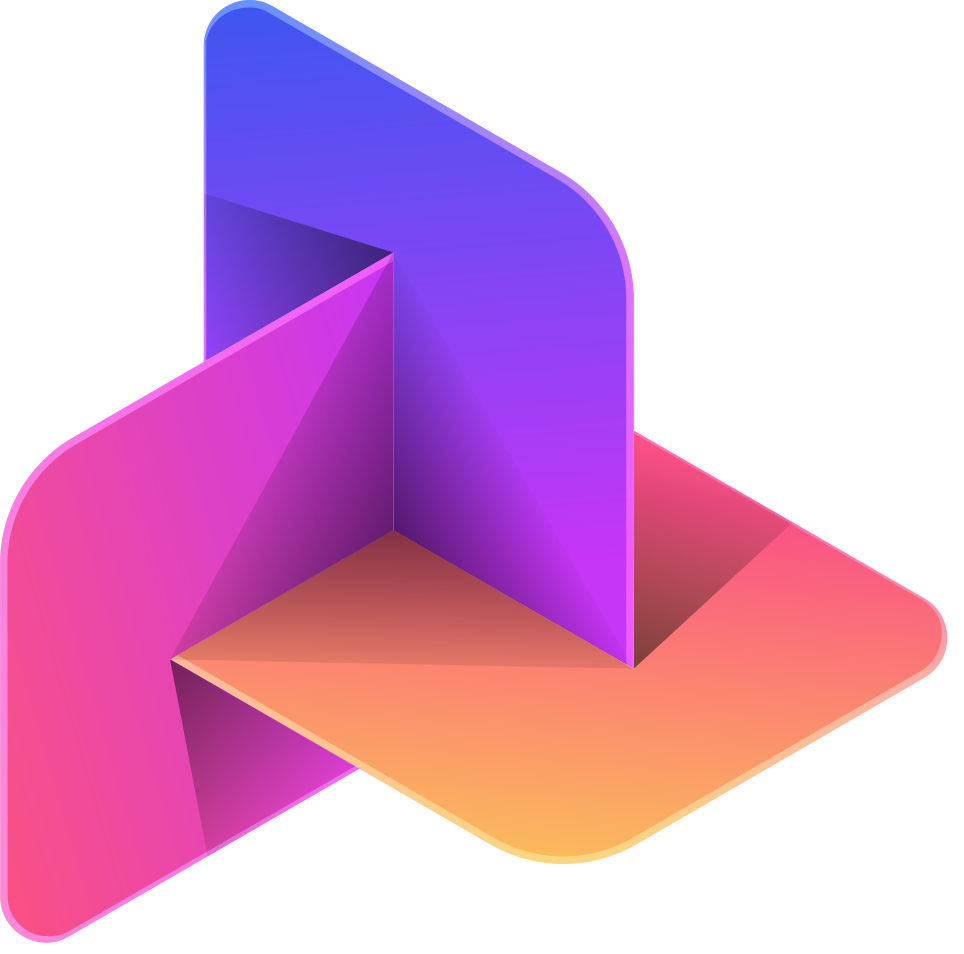

Figure: Avg Token Usage (Per Problem) (per-model bars, y-axis: Avg Tokens / Problem, 0 to 48.1K)

EntropyMath is an evolutionary multi-agent system and benchmark that generates high-entropy math problems designed to systematically break current LLMs. This seed set consists of 10 curated problems drawn from the MATH dataset and serves as the foundational baseline for evaluating mathematical reasoning. Models are tested on their ability to solve these core problems, which are also the sources from which harder derivative tasks are generated.

Results are reported as Accuracy and Pass@3 (three attempts per problem) to account for generation variance, alongside detailed execution traces for transparency.
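The page does not state formulas for the two metrics. The values in the table below are consistent with the following definitions, offered as an inference rather than the benchmark's documented method: for a given model, let $c_i$ be the number of correct attempts and $n_i$ the number of valid attempts on problem $i$ (cells such as 1/2 suggest $n_i$ can be less than 3).

$$
\mathrm{Acc} = 100 \cdot \frac{\sum_{i=0}^{9} c_i}{\sum_{i=0}^{9} n_i},
\qquad
\mathrm{Pass@3} = \frac{100}{10} \sum_{i=0}^{9} \mathbb{1}\left[c_i \ge 1\right]
$$

For example, Grok-4.1-fast is correct on 27 of its 30 attempts and solves every problem at least once, giving $\mathrm{Acc} = 100 \cdot 27/30 = 90.0$ and $\mathrm{Pass@3} = 100 \cdot 10/10 = 100.0$.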

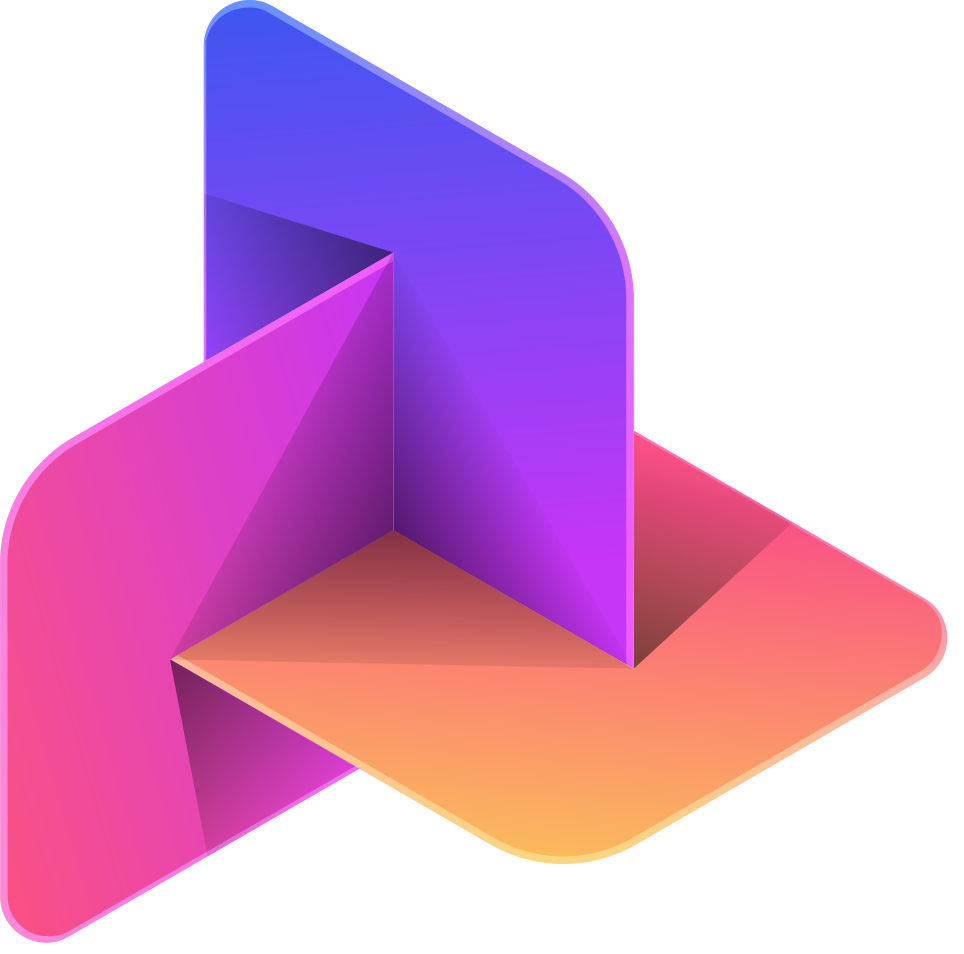

Performance Legend

| Level | Correct attempts |
|---|---|
| Mastery (100%) | 3/3 |
| Strong (66%) | 2/3 |
| Weak (33%) | 1/3 |
| Fail (0%) | 0/3 |

| Model | Acc (%) | Pass@3 (%) | P0 | P1 | P2 | P3 | P4 | P5 | P6 | P7 | P8 | P9 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| API / Others | | | | | | | | | | | | |
| Grok-4.1-fast | 90.0 | 100.0 | 3/3 | 3/3 | 3/3 | 3/3 | 3/3 | 2/3 | 1/3 | 3/3 | 3/3 | 3/3 |
| GPT-5.1 (high) | 86.7 | 90.0 | 2/3 | 3/3 | 3/3 | 3/3 | 3/3 | 3/3 | 0/3 | 3/3 | 3/3 | 3/3 |
| Claude-Opus-4.5 | 86.2 | 90.0 | 3/3 | 3/3 | 3/3 | 3/3 | 3/3 | 1/2 | 0/3 | 3/3 | 3/3 | 3/3 |
| Gemini-3-Pro-Preview | 83.3 | 90.0 | 3/3 | 3/3 | 3/3 | 3/3 | 3/3 | 1/3 | 0/3 | 3/3 | 3/3 | 3/3 |
| Deepseek-V3.2 | 82.8 | 90.0 | 2/3 | 3/3 | 3/3 | 3/3 | 3/3 | 1/2 | 0/3 | 3/3 | 3/3 | 3/3 |
| K-LLM Project Round 2 | | | | | | | | | | | | |
| K-EXAONE-236B-A23B | 76.7 | 90.0 | 3/3 | 3/3 | 2/3 | 3/3 | 2/3 | 1/3 | 0/3 | 3/3 | 3/3 | 3/3 |
| Solar-Open-100B | 56.7 | 70.0 | 2/3 | 3/3 | 2/3 | 3/3 | 2/3 | 0/3 | 0/3 | 0/3 | 2/3 | 3/3 |
| K-LLM Project Round 1 | | | | | | | | | | | | |
| Solar-Pro-2 (31B)(high) | 53.3 | 70.0 | 1/3 | 3/3 | 2/3 | 3/3 | 3/3 | 0/3 | 0/3 | 0/3 | 1/3 | 3/3 |
| EXAONE-4.0.1-32B (high) | 46.7 | 60.0 | 2/3 | 3/3 | 3/3 | 3/3 | 1/3 | 0/3 | 0/3 | 0/3 | 0/3 | 2/3 |
| HCX-007(high) | 26.7 | 40.0 | 0/3 | 3/3 | 1/3 | 2/3 | 2/3 | 0/3 | 0/3 | 0/3 | 0/3 | 0/3 |
| A.X-4.0 (72B) | 23.3 | 30.0 | 0/3 | 3/3 | 0/3 | 2/3 | 0/3 | 0/3 | 0/3 | 0/3 | 0/3 | 2/3 |
| Llama-VARCO-8B-Instruct | 7.1 | 20.0 | 0/3 | 1/3 | 0/3 | 0/3 | 0/3 | 0/3 | 0/1 | 0/3 | 0/3 | 1/3 |
| Local - KR | | | | | | | | | | | | |
| Kanana-2-30B-Thinking-2601 | 63.3 | 80.0 | 3/3 | 3/3 | 1/3 | 3/3 | 3/3 | 0/3 | 0/3 | 1/3 | 2/3 | 3/3 |
| Kanana-2-30B-Thinking | 60.0 | 80.0 | 1/3 | 3/3 | 2/3 | 3/3 | 2/3 | 0/3 | 0/3 | 2/3 | 2/3 | 3/3 |
| Local - US | | | | | | | | | | | | |
| GPT-oss-20B (high) | 82.1 | 80.0 | 2/3 | 3/3 | 3/3 | 3/3 | 3/3 | 0/1 | 0/3 | 3/3 | 3/3 | 3/3 |
| Gemma-3-27B | 26.7 | 40.0 | 0/3 | 2/3 | 0/3 | 2/3 | 3/3 | 0/3 | 0/3 | 0/3 | 0/3 | 1/3 |
| Local - CN | | | | | | | | | | | | |
| Deepseek-R1-distill-Qwen-32B (high) | 56.7 | 70.0 | 1/3 | 3/3 | 3/3 | 3/3 | 3/3 | 0/3 | 0/3 | 0/3 | 1/3 | 3/3 |
| Qwen3-30B-A3B-2507 | 57.7 | 50.0 | 0/3 | 3/3 | 0/3 | 3/3 | 3/3 | 0/1 | 0/3 | 0/1 | 3/3 | 3/3 |
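As a cross-check of how the Acc and Pass@3 columns relate to the per-problem cells, the sketch below recomputes four rows of the table. It assumes, as an inference from the numbers rather than a documented definition, that Acc pools correct attempts over all valid attempts and that Pass@3 is the share of the ten problems solved at least once. The helper names `parse_cell` and `score_row` are hypothetical.

```python
def parse_cell(cell: str) -> tuple[int, int]:
    """Split a table cell such as '2/3' into (correct, valid_attempts)."""
    correct, attempts = cell.split("/")
    return int(correct), int(attempts)


def score_row(cells: list[str]) -> tuple[float, float]:
    """Return (accuracy %, pass@3 %) for one model's per-problem cells.

    Assumed definitions (inferred from the table, not stated by the benchmark):
    - accuracy: total correct attempts / total valid attempts
    - pass@3:   fraction of problems with at least one correct attempt
    """
    tallies = [parse_cell(c) for c in cells]
    correct = sum(c for c, _ in tallies)
    attempts = sum(n for _, n in tallies)
    solved = sum(1 for c, _ in tallies if c >= 1)
    accuracy = 100 * correct / attempts
    pass_at_3 = 100 * solved / len(tallies)
    return round(accuracy, 1), round(pass_at_3, 1)


# Per-problem cells (P0..P9) copied from the table above.
rows = {
    "Grok-4.1-fast": "3/3 3/3 3/3 3/3 3/3 2/3 1/3 3/3 3/3 3/3",
    "Claude-Opus-4.5": "3/3 3/3 3/3 3/3 3/3 1/2 0/3 3/3 3/3 3/3",
    "GPT-oss-20B (high)": "2/3 3/3 3/3 3/3 3/3 0/1 0/3 3/3 3/3 3/3",
    "Qwen3-30B-A3B-2507": "0/3 3/3 0/3 3/3 3/3 0/1 0/3 0/1 3/3 3/3",
}

for model, cells in rows.items():
    acc, p3 = score_row(cells.split())
    print(f"{model}: Acc {acc}, Pass@3 {p3}")
```

Under these assumptions the script reproduces the published values for all four rows, including GPT-oss-20B (high) at 82.1 / 80.0. If every cell had three valid attempts, accuracy could never exceed Pass@3; it does here only because cells such as 0/1 shrink the accuracy denominator.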